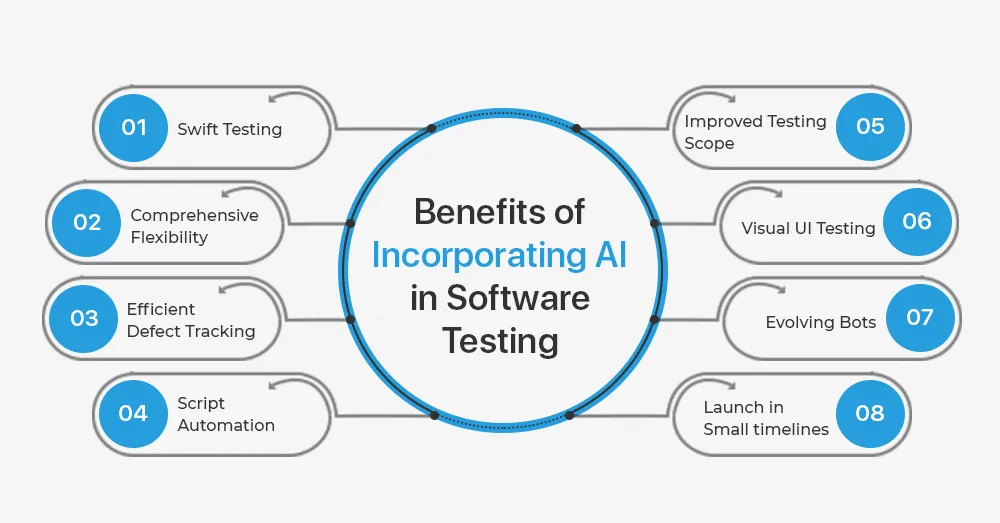

Artificial Intelligence (AI) has made a significant impact across various industries, and software testing is no exception. By integrating AI into software testing, organisations can enhance the efficiency, accuracy, and effectiveness of their testing processes. In this blog, we’ll explore the transformative role of AI in software testing and how it can help testers achieve better results.

AI as an Accelerator in Software Testing

One of the most significant benefits of AI in software testing is its ability to act as an accelerator. AI-powered tools can automate repetitive tasks, analyse vast amounts of data, and provide insights that would be difficult, if not impossible, for humans to achieve on their own. This allows testers to focus on more complex and creative aspects of testing, ultimately improving the overall quality of the software.

Automating Test Case Generation

AI can streamline the generation of test cases by analysing user stories and requirements to create comprehensive test scenarios. By leveraging natural language processing (NLP) and machine learning algorithms, AI can interpret the context and intent behind user stories and generate relevant test cases that cover a wide range of scenarios. This not only saves time but also helps test cases cover the critical aspects of the software more thoroughly.

Enhancing Code Generation and Maintenance

AI can assist in code generation and maintenance by analysing existing codebases and identifying patterns and best practices. This can help developers write cleaner, more efficient code and reduce the likelihood of introducing bugs. Additionally, AI can continuously monitor the codebase for changes and automatically update test scripts to reflect these changes, ensuring that tests remain relevant and up-to-date.

Improving Test Execution and Analysis

AI can optimise test execution by prioritising test cases based on their likelihood of uncovering defects. By analysing historical test data and usage patterns, AI can identify which areas of the software are most likely to contain bugs and focus testing efforts accordingly. This targeted approach not only improves the efficiency of the testing process but also increases the chances of finding critical defects early.
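
To make the idea of prioritisation concrete, here is a minimal sketch that ranks tests by how often they failed in earlier runs. It is a simple heuristic standing in for a learned model, and the function name `prioritise_tests` and the shape of the `history` data are assumptions made for illustration.

```python
from collections import defaultdict

def prioritise_tests(history):
    """Order test names by historical failure rate, highest first.

    `history` is a list of (test_name, passed) tuples from earlier runs.
    """
    runs = defaultdict(int)
    failures = defaultdict(int)
    for name, passed in history:
        runs[name] += 1
        if not passed:
            failures[name] += 1
    # Sort by failure rate descending; ties are broken by name for a stable order.
    return sorted(runs, key=lambda n: (-failures[n] / runs[n], n))

# Hypothetical run history: checkout always failed, login failed half the time.
history = [
    ("test_login", True), ("test_login", False),
    ("test_checkout", False), ("test_checkout", False),
    ("test_search", True), ("test_search", True),
]
print(prioritise_tests(history))
# ['test_checkout', 'test_login', 'test_search']
```

A real tool would likely weigh more signals, such as recent code changes or usage data, but the ordering step would look much the same.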

Furthermore, AI can enhance the analysis of test results by quickly identifying patterns and anomalies that might indicate potential issues. This allows testers to pinpoint the root cause of defects more efficiently and take corrective action faster.
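
As a rough illustration of anomaly detection in test results, the sketch below flags test runs that took unusually long compared with the rest. It uses the median absolute deviation, a plain statistical check rather than a trained model, and the name `flag_slow_runs` and the threshold of 3.0 are assumptions chosen for the example.

```python
from statistics import median

def flag_slow_runs(durations, threshold=3.0):
    """Return indices of runs whose duration sits far above the median.

    Distance is measured in units of the median absolute deviation (MAD),
    which is less sensitive to outliers than the standard deviation.
    """
    med = median(durations)
    mad = median(abs(d - med) for d in durations)
    if mad == 0:
        return []  # no spread to compare against, so nothing is flagged
    return [i for i, d in enumerate(durations) if (d - med) / mad > threshold]

# Hypothetical durations in seconds; the last run is clearly out of line.
durations = [1.0, 1.1, 0.9, 1.2, 1.0, 9.5]
print(flag_slow_runs(durations))
# [5]
```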

Autonomous Testing

Autonomous testing is an emerging trend that leverages AI to create and execute tests with minimal human intervention. By analysing real user data and usage patterns, AI can automatically generate test cases that reflect actual usage scenarios. This ensures that tests are realistic and relevant, improving the accuracy of test results.

Visualising User Journeys

AI can provide visual representations of user journeys, highlighting how users interact with the software in real-world scenarios. These visualisations help testers identify critical workflows and prioritise testing efforts accordingly. By focusing on high-impact areas, testers can ensure that the most important aspects of the software are thoroughly tested.

Shifting Left and Right in Testing

AI supports both shift-left and shift-right testing approaches. In shift-left testing, AI can assist in creating test cases early in the development process, even before the system under test is fully developed. This proactive approach helps identify potential issues early, reducing the cost and effort required to fix them.

In shift-right testing, AI can analyse real user data from production environments to identify areas of risk and generate test cases accordingly. This ensures that testing remains aligned with actual usage patterns, improving the relevance and effectiveness of tests.
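
One simple way to picture the shift-right idea is to compare what users request in production with what the test suite already exercises. The sketch below is only an illustration under assumed inputs: `production_log` is a hypothetical list of request paths, `tested` is a hypothetical set of paths that already have tests, and `untested_hotspots` is a made-up helper name.

```python
from collections import Counter

def untested_hotspots(request_paths, tested_paths, top_n=3):
    """Return the most-requested paths that no existing test exercises."""
    usage = Counter(request_paths)
    # most_common() yields (path, hits) pairs, busiest first.
    gaps = [(path, hits) for path, hits in usage.most_common()
            if path not in tested_paths]
    return gaps[:top_n]

production_log = ["/cart", "/search", "/cart", "/checkout",
                  "/cart", "/search", "/profile"]
tested = {"/search", "/profile"}
print(untested_hotspots(production_log, tested))
# [('/cart', 3), ('/checkout', 1)]
```

The paths at the top of this list are candidates for new test cases, since they combine heavy real-world use with no coverage.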

Embracing AI in Software Testing

While some may be sceptical about the integration of AI in software testing, it’s essential to approach it with an open mind. AI should be viewed as a tool that enhances human capabilities rather than a replacement for human testers. By embracing AI, testers can offload repetitive tasks, gain valuable insights, and focus on adding value through their domain expertise and critical thinking.

Enhancing Collaboration

AI-powered testing tools can benefit the entire development team, not just testers. By providing insights into system behaviour and identifying areas of risk, AI fosters collaboration and unifies the team. Business users, developers, and testers can all leverage AI-generated insights to make informed decisions, ultimately improving the quality and reliability of the software.

Code Implementation:

Python Code Testing with OpenAI

Let’s dive into an example of how AI can be integrated into software testing by using OpenAI to generate and analyse test cases for Python code.

Overview

The following example demonstrates a Streamlit application that allows users to input Python code and either write their own test cases or generate them using OpenAI. The application then runs the tests and provides analysis using OpenAI.

Code Breakdown

1. Importing Libraries and Setting Up API Key

This section imports the necessary libraries and sets up the OpenAI API key.

    from openai import OpenAI
    import streamlit as st
    import tempfile
    import subprocess
    import os
    import re

    # Set your OpenAI API key (replace the placeholder; avoid committing a real key)
    client = OpenAI(api_key="api_key")

2. Running Python Tests

This function runs the provided Python code and test cases using pytest and captures the output and errors.

    def run_python_tests(code, test_cases):
        results = {}
        # Write the code and tests to a temporary directory that is removed afterwards
        with tempfile.TemporaryDirectory() as tmpdirname:
            code_file = os.path.join(tmpdirname, 'user_code.py')
            with open(code_file, 'w') as f:
                f.write(code)

            test_file = os.path.join(tmpdirname, 'test_user_code.py')
            with open(test_file, 'w') as f:
                # Point the tests' imports at the temporary module name
                f.write(test_cases.replace("from code import", "from user_code import"))

            # Run pytest while the temporary files still exist
            result = subprocess.run(['pytest', test_file], capture_output=True, text=True)
            results['output'] = result.stdout
            results['errors'] = result.stderr
        return results
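
The function above keeps pytest's stdout and stderr, but the object returned by `subprocess.run` also carries a `returncode`. As a small sketch, and not part of the original application, the hypothetical helper `describe_exit_code` below turns pytest's documented exit codes into a readable status that the app could show next to the raw output.

```python
# Exit codes documented by pytest (see pytest.ExitCode).
PYTEST_EXIT_CODES = {
    0: "all tests passed",
    1: "some tests failed",
    2: "run interrupted by the user",
    3: "internal pytest error",
    4: "pytest command-line usage error",
    5: "no tests were collected",
}

def describe_exit_code(returncode):
    """Map a pytest return code to a short human-readable status."""
    return PYTEST_EXIT_CODES.get(returncode, f"unexpected exit code {returncode}")

print(describe_exit_code(1))
# some tests failed
```

Exit code 5 is worth surfacing in particular: it would tell the user that the generated test file contained no collectable tests, which an empty-looking output alone does not make obvious.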

3. Generate Test Cases with OpenAI

This function generates pytest test cases for the provided code using OpenAI’s GPT model.

    def generate_test_cases_with_openai(code):
        messages = [
            {"role": "system", "content": "You are a helpful assistant for generating Python test cases."},
            {"role": "user", "content": (
                f"Generate pytest test cases for the following Python code:\n\n{code}\n\n"
                "Also import modules of the code from user_code, don't use any other random names. "
                "Generate only pytest test case code, don't give any other text or answers."
            )},
        ]
        response = client.chat.completions.create(
            model="gpt-4o",
            messages=messages,
            max_tokens=1000
        )
        response_text = response.choices[0].message.content
        print(response_text)

        # Extract code block using regular expression
        match = re.search(r"```python(.*?)```", response_text, re.DOTALL)
        if match:
            test_cases = match.group(1).strip()
        else:
            # No fenced Python block in the reply: return an empty string
            test_cases = ""
        return test_cases
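
The regular expression above only matches replies fenced with the `python` tag, so a reply with an untagged fence, or with no fence at all, yields an empty string. The sketch below shows one more tolerant way to extract the code. It is an alternative under stated assumptions rather than the original behaviour: `extract_code` is a hypothetical helper, and falling back to the whole reply is a design choice that may let stray prose through.

```python
import re

# Three backticks, built this way so the example can sit inside a fenced block.
FENCE = "`" * 3

def extract_code(response_text):
    """Return the first fenced code block, or the whole reply if none is found."""
    # Accept an optional "python" or "py" tag after the opening fence.
    pattern = FENCE + r"(?:python|py)?\s*\n(.*?)" + FENCE
    match = re.search(pattern, response_text, re.DOTALL)
    if match:
        return match.group(1).strip()
    return response_text.strip()

tagged = f"Here you go:\n{FENCE}python\nassert True\n{FENCE}"
untagged = f"{FENCE}\nassert 1 == 1\n{FENCE}"
print(extract_code(tagged))
print(extract_code(untagged))
print(extract_code("assert 2 > 1"))
```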

4. Analysing Code with OpenAI

This function uses OpenAI to analyse the provided code and test cases, providing insights and suggestions for improvement.

    def analyze_code_with_openai(code, test_cases):
        messages = [
            {"role": "system", "content": "You are a helpful assistant for analysing Python code and test cases."},
            {"role": "user", "content": (
                "Analyse the following Python code and its corresponding test cases:\n\n"
                f"Code:\n{code}\n\nTest Cases:\n{test_cases}\n\n"
                "Provide insights, potential issues, and suggestions for improvement."
            )},
        ]
        response = client.chat.completions.create(
            model="gpt-4o",
            messages=messages,
            max_tokens=1000
        )
        return response.choices[0].message.content

5. Streamlit Application Interface

This section creates the Streamlit interface for the application, allowing users to input their code, choose how to provide test cases, run tests, and analyse the results.

st.title('Python Code Testing with OpenAI')

st.write("""

This is a simple web application to test Python code using Streamlit and analyse it with OpenAI.

Enter your Python code and choose whether to write your own test cases or generate them using AI.

""")

code = st.text_area("Enter your Python code here:", height=200)

test_case_option = st.radio(

"How would you like to provide test cases?",

('Manual', 'AI Generated')

)

test_cases = st.session_state.get('test_cases', '')

if test_case_option == 'Manual':

test_cases = st.text_area("Enter your test cases here:", test_cases, height=200)

else:

if st.button("Generate Test Cases"):

if code:

with st.spinner("Generating test cases with OpenAI..."):

test_cases = generate_test_cases_with_openai(code)

st.session_state.test_cases = test_cases

st.text_area("Generated Test Cases:", test_cases, height=200)

else:

st.error("Please enter your Python code to generate test cases.")

if st.button("Run Tests and Analyze"):

if code and test_cases:

with st.spinner("Running tests..."):

results = run_python_tests(code, test_cases)

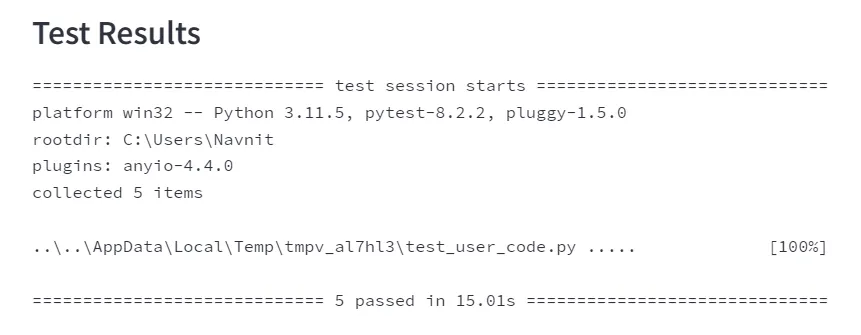

st.subheader("Test Results")

st.text(results['output'])

if results['errors']:

st.subheader("Errors")

st.text(results['errors'])

with st.spinner("Analysing code with OpenAI..."):

analysis = analyze_code_with_openai(code, test_cases)

st.subheader("AI Analysis")

st.text(analysis)

else:

st.error("Please enter both code and test cases to run the tests and analyse the code.")

Sample Python Code To Test:

This Python code defines a WebScraper class that uses multithreading to scrape web page titles from a list of URLs, retrying failed requests and reporting errors. It uses requests to fetch URLs and BeautifulSoup to parse HTML, and it guards the shared results list with a lock to keep operations thread-safe.

    import requests
    from bs4 import BeautifulSoup
    import threading
    import time
    from queue import Queue, Empty

    class RequestError(Exception):
        pass

    class WebScraper:
        def __init__(self, base_url, max_threads=5, max_retries=3):
            self.base_url = base_url
            self.max_threads = max_threads
            self.max_retries = max_retries
            self.visited_urls = set()
            self.url_queue = Queue()
            self.results = []
            self.lock = threading.Lock()

        def fetch_url(self, url):
            retries = 0
            while retries < self.max_retries:
                try:
                    response = requests.get(url, timeout=5)
                    response.raise_for_status()
                    return response.text
                except requests.RequestException:
                    retries += 1
                    time.sleep(2 ** retries)  # exponential backoff: 2, 4, 8, ... seconds
            raise RequestError(f"Failed to fetch {url} after {self.max_retries} retries")

        def parse_html(self, html):
            soup = BeautifulSoup(html, 'html.parser')
            title = soup.find('title').get_text()
            return title

        def worker(self):
            while True:
                try:
                    # get_nowait avoids blocking forever if another thread took the last URL
                    url = self.url_queue.get_nowait()
                except Empty:
                    break
                try:
                    html = self.fetch_url(url)
                    title = self.parse_html(html)
                    with self.lock:
                        self.results.append((url, title))
                except RequestError as e:
                    with self.lock:
                        print(e)
                finally:
                    self.url_queue.task_done()

        def start_scraping(self, urls):
            for url in urls:
                if url not in self.visited_urls:
                    self.visited_urls.add(url)
                    self.url_queue.put(url)

            threads = []
            for _ in range(self.max_threads):
                thread = threading.Thread(target=self.worker)
                thread.start()
                threads.append(thread)

            self.url_queue.join()
            for thread in threads:
                thread.join()
            return self.results

    if __name__ == "__main__":
        scraper = WebScraper(base_url="http://books.toscrape.com")
        urls = [
            "http://books.toscrape.com/catalogue/page-1.html",
            "http://books.toscrape.com/catalogue/page-2.html",
            "http://books.toscrape.com/catalogue/page-3.html",
        ]
        results = scraper.start_scraping(urls)
        for url, title in results:
            print(f"{url}: {title}")

AI-Generated Test Cases

These test cases for the WebScraper class verify the correct functionality of fetching URLs, parsing HTML, and handling multiple URLs using mocked requests to simulate different responses. They ensure the scraper accurately processes and retrieves page titles and handles errors appropriately.

    import pytest
    import requests
    from unittest.mock import patch
    from user_code import WebScraper, RequestError

    # Helper function to mock requests.get
    def mock_requests_get(url, timeout):
        if 'page-1.html' in url:
            return MockResponse('<html><head><title>Page 1</title></head></html>', 200)
        elif 'page-2.html' in url:
            return MockResponse('<html><head><title>Page 2</title></head></html>', 200)
        elif 'page-3.html' in url:
            return MockResponse('<html><head><title>Page 3</title></head></html>', 200)
        else:
            raise requests.RequestException("Mocked exception")

    # Mock response object
    class MockResponse:
        def __init__(self, text, status_code):
            self.text = text
            self.status_code = status_code

        def raise_for_status(self):
            if self.status_code != 200:
                raise requests.RequestException("Mocked error")

    @pytest.fixture
    def scraper():
        return WebScraper(base_url="http://books.toscrape.com")

    @patch('requests.get', side_effect=mock_requests_get)
    def test_fetch_url_success(mock_get, scraper):
        url = "http://books.toscrape.com/catalogue/page-1.html"
        response = scraper.fetch_url(url)
        assert response == '<html><head><title>Page 1</title></head></html>'

    # Note: this test waits through the scraper's backoff delays (2 + 4 + 8 seconds)
    @patch('requests.get', side_effect=mock_requests_get)
    def test_fetch_url_failure(mock_get, scraper):
        url = "http://books.toscrape.com/catalogue/page-4.html"
        with pytest.raises(RequestError):
            scraper.fetch_url(url)

    def test_parse_html(scraper):
        html = '<html><head><title>Test Page</title></head></html>'
        title = scraper.parse_html(html)
        assert title == 'Test Page'

    @patch('requests.get', side_effect=mock_requests_get)
    def test_worker(mock_get, scraper):
        urls = [
            "http://books.toscrape.com/catalogue/page-1.html",
            "http://books.toscrape.com/catalogue/page-2.html",
        ]
        for url in urls:
            scraper.url_queue.put(url)
        scraper.worker()
        assert len(scraper.results) == 2
        assert scraper.results[0] == (urls[0], 'Page 1')
        assert scraper.results[1] == (urls[1], 'Page 2')

    @patch('requests.get', side_effect=mock_requests_get)
    def test_start_scraping(mock_get, scraper):
        urls = [
            "http://books.toscrape.com/catalogue/page-1.html",
            "http://books.toscrape.com/catalogue/page-2.html",
            "http://books.toscrape.com/catalogue/page-3.html",
        ]
        results = scraper.start_scraping(urls)
        assert len(results) == 3
        # Threads may finish in any order, so compare the sorted results
        assert sorted(results) == [
            (urls[0], 'Page 1'),
            (urls[1], 'Page 2'),
            (urls[2], 'Page 3'),
        ]
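
One practical note on tests like these: any test that exercises the retry path will wait through the real backoff delays unless `time.sleep` is also mocked. The sketch below shows the technique in isolation. It is a self-contained illustration and not part of the generated output: `fetch_with_retries` and `always_fails` are hypothetical stand-ins that mirror the scraper's backoff pattern.

```python
import time
from unittest.mock import patch

def fetch_with_retries(fetch, max_retries=3):
    """Call `fetch` until it succeeds, sleeping 2, 4, 8... seconds after each failure."""
    for attempt in range(1, max_retries + 1):
        try:
            return fetch()
        except ConnectionError:
            time.sleep(2 ** attempt)
    raise RuntimeError(f"gave up after {max_retries} retries")

def always_fails():
    raise ConnectionError("simulated outage")

def test_gives_up_without_waiting():
    # Replace time.sleep with a mock so the backoff delays are skipped.
    with patch("time.sleep") as fake_sleep:
        try:
            fetch_with_retries(always_fails)
            raised = False
        except RuntimeError:
            raised = True
    assert raised
    # The mock records the delays the code asked for.
    assert [c.args[0] for c in fake_sleep.call_args_list] == [2, 4, 8]

test_gives_up_without_waiting()
```

Under pytest, the try/except bookkeeping would normally be replaced with `pytest.raises(RuntimeError)`; it is written out here only so the example runs without extra dependencies.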

Results of AI-Generated Test Cases

Demo

Conclusion

The integration of AI in software testing is transforming the way testing is conducted, providing tools and techniques that enhance efficiency, accuracy, and collaboration. By viewing AI as an accelerator and embracing its potential, testers can focus on high-value tasks, improve test coverage, and deliver better software faster. The future of software testing is undoubtedly AI-augmented, and those who embrace this technology will be well-positioned to achieve testing excellence.