Model Context Protocol (MCP) is an open protocol developed by Anthropic that is poised to reshape how AI systems interact with external tools and data. It is designed to create secure, two-way connections between AI-powered applications and external data sources. In simple terms, developers can either expose their business data through MCP servers or build AI applications (MCP clients) that connect to these servers, allowing for real-time, context-rich interactions without one-off custom integrations. Since its introduction in late 2024, MCP has been adopted by major tech companies, including OpenAI, Microsoft, and AWS, and some estimates suggest most organizations will be using MCP by the end of 2025.

In this post, we’ll look at what MCP is, how it works, why leading enterprises are adopting it, and how it is shaping the future of AI applications and AI agents, including its latest enterprise features and security updates.

What is MCP?

MCP, or Model Context Protocol, is an open protocol designed to standardize how AI applications and agents interact with external systems, tools, and data sources. Think of it as a universal language that allows AI models to integrate with databases, CRMs, file systems, and more, without requiring custom implementations for each integration. At its core, an MCP server can expose three kinds of capabilities:

- Prompts: Predefined templates for common interactions.

- Tools: Functions that the model can invoke to perform tasks like reading, writing, or updating data.

- Resources: Data exposed to the application, such as files, images, or JSON structures.
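Under the hood, each primitive is reached through a JSON-RPC 2.0 request. The minimal sketch below builds one request per primitive using only the standard library; the method names prompts/get, tools/call, and resources/read come from the MCP specification, while the prompt name, tool name, arguments, and URI are hypothetical placeholders.

```python
import json
from itertools import count

# Monotonically increasing JSON-RPC request ids.
_ids = count(1)


def make_request(method: str, params: dict) -> dict:
    """Build a JSON-RPC 2.0 request object."""
    return {"jsonrpc": "2.0", "id": next(_ids), "method": method, "params": params}


# Prompt: ask the server to render a predefined template (hypothetical name/args).
get_prompt = make_request(
    "prompts/get", {"name": "summarize_ticket", "arguments": {"ticket_id": "T-42"}}
)

# Tool: ask the server to run a function on the model's behalf (hypothetical tool).
call_tool = make_request(
    "tools/call", {"name": "update_record", "arguments": {"id": 7, "status": "closed"}}
)

# Resource: ask the server for data identified by a URI (hypothetical URI).
read_resource = make_request("resources/read", {"uri": "file:///reports/q3.json"})

if __name__ == "__main__":
    for msg in (get_prompt, call_tool, read_resource):
        print(json.dumps(msg))
```

Prompts and resources are usually selected by the user or the application, while tools are invoked by the model itself, which is why tools/call carries the model-chosen arguments.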

MCP draws inspiration from existing approaches like web APIs (for web app interactions) and the Language Server Protocol (LSP) (for IDEs and coding tools). However, it takes these concepts further by creating a standardized layer specifically for AI applications, enabling them to interact with external systems in a more intelligent and context-aware manner.

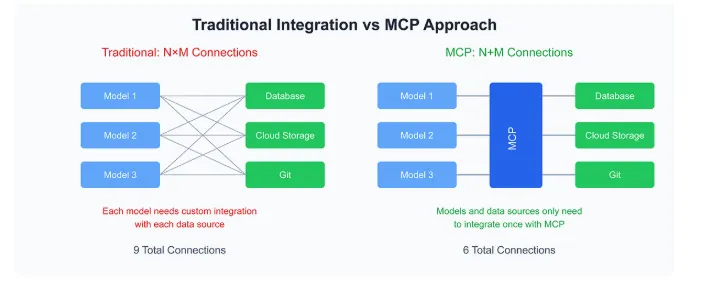

The protocol aims to eliminate the need for developers to write redundant custom integration code every time they need to link a new tool or data source to an AI system. Instead, MCP provides a unified method for all these connections, allowing developers to spend more time building features and less time on integration.
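A quick back-of-the-envelope count shows why a shared protocol helps. If M AI applications are wired directly to N tools, each pair needs its own connector, up to M×N in total; if every application implements an MCP client once and every tool is wrapped in an MCP server once, the count drops to M+N. The sketch below assumes every application needs every tool, which is the worst case.

```python
def integration_counts(apps: int, tools: int) -> tuple:
    """Return (point-to-point connectors, MCP components).

    Assumes every application needs every tool (worst case).
    """
    point_to_point = apps * tools  # one custom connector per (app, tool) pair
    with_mcp = apps + tools        # one MCP client per app, one MCP server per tool
    return point_to_point, with_mcp


if __name__ == "__main__":
    for m, n in [(3, 5), (10, 20), (50, 100)]:
        direct, mcp = integration_counts(m, n)
        print(f"{m} apps x {n} tools: {direct} custom connectors vs {mcp} MCP components")
```

For 10 applications and 20 tools, that is 200 custom connectors versus 30 MCP components, and the gap widens as either side grows.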

Enterprise-Grade MCP Adoption:

Within months of its introduction, major technology platforms embraced MCP as a common standard for connecting AI assistants to external data sources and business systems.

- Anthropic Launches MCP: In November 2024, Anthropic introduced MCP as an open-source standard, launching with immediate support in Claude Desktop and partnerships with development platforms like Replit and Sourcegraph. Users can connect Claude to content repositories, business tools, and development environments for contextual AI responses from live data.

- OpenAI Adoption: In March 2025, OpenAI officially adopted MCP through the OpenAI Agents SDK with planned ChatGPT desktop and API integration. This allows ChatGPT users to connect their AI conversations directly to custom applications, databases, and APIs without building complex integrations.

- Microsoft Copilot Studio Adoption: In May 2025, Microsoft released native MCP support in Copilot Studio, offering one-click connections to any MCP server, new tool listings, streaming transport, and comprehensive tracing and analytics. With MCP integration, makers can connect existing knowledge servers and APIs directly from Copilot Studio, with actions and knowledge automatically added through the protocol, positioning MCP as Copilot’s default bridge to external enterprise systems.

- AWS Adoption: AWS has since launched broad MCP support, targeting the M×N integration problem, in which M AI applications and N tools would otherwise need up to M×N custom connectors. AI agents can now interact with AWS services using natural language commands, simplifying cloud resource management through conversational interfaces.

Why MCP Matters

The motivation behind MCP stems from a simple yet powerful idea: models are only as good as the context we provide them. In the past, AI applications relied on manual input or copy-pasting data to provide context. Today, with MCP, models can directly access and interact with the tools and data sources that matter, making them more powerful, personalized, and efficient.

- Standardization: MCP eliminates fragmentation in AI development by providing a standardized way for AI applications to interact with external systems.

- Interoperability: Once an application is MCP-compatible, it can connect to any MCP server with little or no additional integration work.

- Scalability: Enterprises can now separate concerns between teams, allowing infrastructure teams to manage data access while application teams focus on building AI solutions.

- Open Ecosystem: MCP is open-source, fostering collaboration and innovation across the AI community.

How MCP Works: A Simple Overview

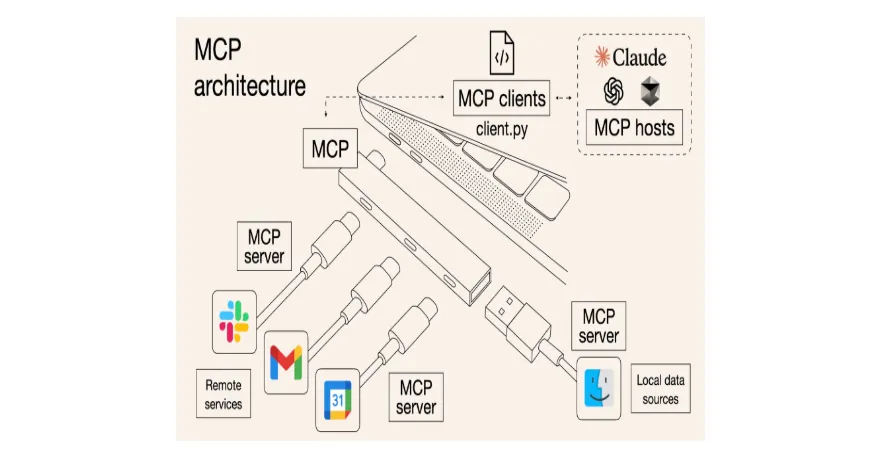

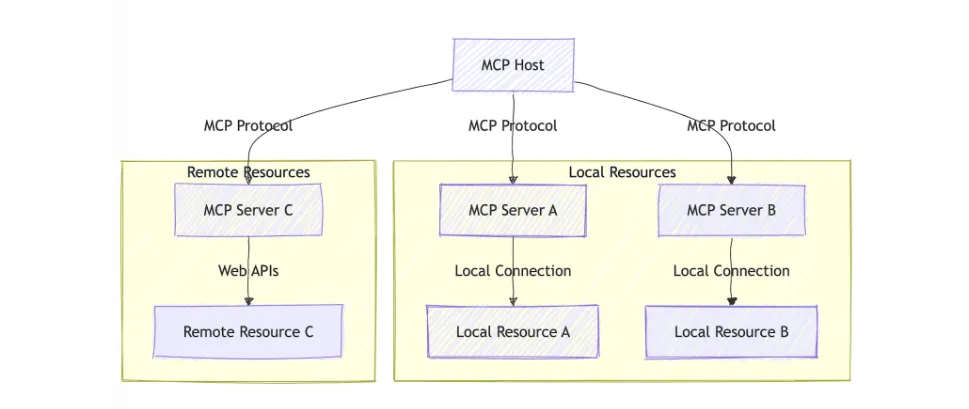

Under the hood, MCP follows a client-server architecture that lets AI models access and interact with external data, tools, and services through a common interface.

Key Components of MCP

- MCP Servers (Data & Tool Connectors): MCP servers act as bridges between AI and external data sources (e.g., files, databases, SaaS tools like Slack or Notion). They fetch data or perform actions based on standardized commands. Many open-source MCP servers already exist (Google Drive, GitHub, SQL, etc.), so developers can use or customize them instead of building from scratch.

- MCP Clients (AI Applications): AI applications include an MCP client to connect with servers and request data or actions. For example, Claude Desktop has an MCP client that connects to local or network-based servers. Communication happens via JSON-RPC, making it language-agnostic and easy to use.

- Standardized Primitives: MCP defines three core primitives that servers expose to AI applications: Prompts, Resources, and Tools.
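Before a client can use any primitive, it opens a session with an initialize request and then sends a notifications/initialized notification. The sketch below builds those two messages and reads a server's reply to see which primitives it supports. The field names follow the MCP specification; the protocol version string 2025-06-18, the client name, and the sample server reply are assumptions for illustration.

```python
import json

PROTOCOL_VERSION = "2025-06-18"  # assumed spec revision; negotiate per your SDK


def initialize_request(request_id: int) -> dict:
    """First message of a session: announce protocol version and capabilities."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "initialize",
        "params": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"roots": {"listChanged": True}, "sampling": {}},
            "clientInfo": {"name": "example-client", "version": "0.1.0"},  # hypothetical
        },
    }


def initialized_notification() -> dict:
    """Sent after a successful initialize; notifications carry no id."""
    return {"jsonrpc": "2.0", "method": "notifications/initialized"}


def server_primitives(response: dict) -> set:
    """Return which primitives (tools/resources/prompts) the server advertises."""
    if "error" in response:
        raise RuntimeError(response["error"].get("message", "initialize failed"))
    caps = response["result"]["capabilities"]
    return {p for p in ("tools", "resources", "prompts") if p in caps}


if __name__ == "__main__":
    # A hypothetical server reply, for illustration only.
    reply = {
        "jsonrpc": "2.0",
        "id": 1,
        "result": {
            "protocolVersion": PROTOCOL_VERSION,
            "capabilities": {"tools": {"listChanged": True}, "resources": {}},
            "serverInfo": {"name": "example-server", "version": "1.0.0"},
        },
    }
    print(json.dumps(initialize_request(1)))
    print(json.dumps(initialized_notification()))
    print(sorted(server_primitives(reply)))
```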

On the client side, two key mechanisms enable AI to interact with servers effectively:

- Roots: Let the client tell a server which locations, such as specific folders or repositories, it should operate within.

- Sampling: Lets a server request an LLM completion from the client mid-task, which helps in complex workflows (human review of these requests is recommended).
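Both mechanisms are plain JSON-RPC exchanges. In the sketch below, within_roots is a hypothetical helper a server might use to keep file access inside the roots a client returned from roots/list (each root is an object with a uri and an optional name), and sampling_request builds the server-to-client sampling/createMessage request. The message shapes follow the MCP specification, which treats roots as boundaries servers should respect rather than an enforced sandbox; the helper names and paths are illustrative, and the path check is purely lexical (it does not resolve symlinks).

```python
import posixpath
from pathlib import PurePosixPath
from urllib.parse import unquote, urlparse


def _file_path(uri: str):
    """Return a normalized path for a file:// URI, or None for other schemes."""
    parsed = urlparse(uri)
    if parsed.scheme != "file":
        return None
    # normpath collapses "..", so ".../project/../secret" cannot escape a root.
    return PurePosixPath(posixpath.normpath(unquote(parsed.path)))


def within_roots(file_uri: str, roots: list) -> bool:
    """True if file_uri lies inside one of the roots from a roots/list result."""
    target = _file_path(file_uri)
    if target is None:
        return False
    for root in roots:
        base = _file_path(root["uri"])
        if base is not None and (target == base or base in target.parents):
            return True
    return False


def sampling_request(request_id: int, prompt: str, max_tokens: int = 200) -> dict:
    """Server-to-client request asking the client's model for a completion."""
    return {
        "jsonrpc": "2.0",
        "id": request_id,
        "method": "sampling/createMessage",
        "params": {
            "messages": [{"role": "user", "content": {"type": "text", "text": prompt}}],
            "maxTokens": max_tokens,
        },
    }


if __name__ == "__main__":
    # Example roots/list result (hypothetical path).
    roots = [{"uri": "file:///home/alice/project", "name": "Project"}]
    print(within_roots("file:///home/alice/project/src/app.py", roots))
    print(within_roots("file:///home/alice/project/../.ssh/id_rsa", roots))
    print(sampling_request(1, "Summarize the open issues in two sentences."))
```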

Security and Access Control in MCP:

As MCP adoption spread, the conversation shifted to security. Developers and enterprises needed confidence that MCP connections would be reliable, permission-aware, and resistant to common risks like prompt injection or unauthorized access. Recent revisions of the MCP specification address these needs with industry-standard authorization measures.

- OAuth 2.1 Authorization: Modernized authorization flow aligned with OAuth 2.1, ensuring compatibility with enterprise authentication systems.

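- Resource Indicators: Tokens are tied to a specific MCP server using OAuth resource indicators (RFC 8707), so a token issued for one service should be rejected by another, isolating access between services.

- Granular Access Management: Fine-grained permissions, typically expressed as OAuth scopes, that can be mapped to enterprise role-based security policies for precise control.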

MCP Transport and Protocol Updates:

Early remote MCP implementations relied on HTTP with Server-Sent Events (SSE), which worked but had limitations for large-scale workflows. Recent specification revisions make interactions more efficient, more reliable, and better suited to multi-platform environments.

- Streamable HTTP Transport: Replaced the HTTP+SSE transport with Streamable HTTP, which uses a single HTTP endpoint and lets the server answer with either a plain JSON response or an SSE stream, enabling more efficient real-time interactions.

- Detailed Tool Annotations: Metadata hints such as readOnlyHint and destructiveHint distinguish read-only tools from write-capable ones, helping clients prevent accidental misuse.

- Cross-Vendor Compatibility: Joint support across OpenAI, Microsoft, and Anthropic makes MCP a common interoperability layer.
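Tool annotations arrive in each tools/list entry as hints. Per the specification, readOnlyHint defaults to false, destructiveHint defaults to true, and clients should treat annotations from untrusted servers as untrusted. The sketch below is one hypothetical client-side policy built on those rules; the three policy levels and the tool names are illustrative, not part of MCP.

```python
def confirmation_policy(tool: dict, trusted_server: bool) -> str:
    """Return "auto", "notify", or "confirm" for a tool from a tools/list result.

    Annotations are only hints, so anything unknown falls through to "confirm".
    """
    if not trusted_server:
        return "confirm"  # ignore hints from servers we don't trust
    hints = tool.get("annotations") or {}
    if hints.get("readOnlyHint", False):
        return "auto"  # declared read-only: run without asking
    if not hints.get("destructiveHint", True):
        return "notify"  # writes, but declared non-destructive (e.g. additive)
    return "confirm"  # may delete or overwrite data: ask the user first


if __name__ == "__main__":
    # Hypothetical tools/list entries (inputSchema omitted for brevity).
    tools = [
        {"name": "get_issue", "annotations": {"readOnlyHint": True}},
        {"name": "add_label", "annotations": {"readOnlyHint": False, "destructiveHint": False}},
        {"name": "delete_branch", "annotations": {"destructiveHint": True}},
        {"name": "legacy_tool"},  # no annotations at all
    ]
    for t in tools:
        print(t["name"], confirmation_policy(t, trusted_server=True))
```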

How Developers Integrate MCP

- Deploy an MCP Server: Run an MCP server for the data source you want AI to access.

- Use an MCP Client: Modify your AI tool to include an MCP client (or use a pre-built one like in Claude Desktop).

- Leverage Open-Source SDKs: Anthropic provides SDKs (e.g., Python) to simplify implementation. Developers can define functions and mark them as resources or tools, with JSON-RPC calls handled in the background.
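The SDKs hide the protocol plumbing: you write ordinary functions, and the SDK derives each tool's name, description, and input schema from the function signature, then answers tools/list and tools/call for you. The toy registry below mimics that idea with the standard library only. It is a hypothetical illustration, not the SDK's actual implementation, and it maps only a few simple Python types to JSON Schema.

```python
import inspect
from typing import Callable, get_type_hints

# Assumed mapping from Python annotations to JSON Schema types.
_JSON_TYPES = {str: "string", int: "integer", float: "number",
               bool: "boolean", list: "array", dict: "object"}


class ToyToolRegistry:
    """Hypothetical stand-in for what an SDK's tool decorator does for you."""

    def __init__(self) -> None:
        self._tools = {}

    def tool(self, fn: Callable) -> Callable:
        """Register fn under its own name and return it unchanged."""
        self._tools[fn.__name__] = fn
        return fn

    def list_tools(self) -> list:
        """Build tools/list-style entries from each function's signature."""
        entries = []
        for name, fn in self._tools.items():
            hints = get_type_hints(fn)
            hints.pop("return", None)
            params = inspect.signature(fn).parameters
            entries.append({
                "name": name,
                "description": inspect.getdoc(fn) or "",
                "inputSchema": {
                    "type": "object",
                    "properties": {p: {"type": _JSON_TYPES.get(t, "string")}
                                   for p, t in hints.items()},
                    "required": [p for p, v in params.items()
                                 if v.default is inspect.Parameter.empty],
                },
            })
        return entries

    def call_tool(self, params: dict) -> dict:
        """Handle tools/call params; report execution failures via isError."""
        fn = self._tools[params["name"]]
        try:
            result = fn(**params.get("arguments", {}))
        except Exception as exc:
            return {"content": [{"type": "text", "text": str(exc)}], "isError": True}
        return {"content": [{"type": "text", "text": str(result)}], "isError": False}


registry = ToyToolRegistry()


@registry.tool
def add(a: int, b: int = 0) -> int:
    """Add two integers."""
    return a + b


if __name__ == "__main__":
    print(registry.list_tools())
    print(registry.call_tool({"name": "add", "arguments": {"a": 2, "b": 3}}))
```

In the real Python SDK, the same idea appears as the @mcp.tool() decorator on a FastMCP server, as in the tutorial servers later in this post.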

Developer Tooling & SDKs

MCP provides a rich set of SDKs across multiple languages, making it easier for developers to integrate external tools and workflows. From cloud deployments to productivity automation, MCP bridges data and action seamlessly.

- Multi-language SDKs: Official support for Python, TypeScript, Java, and C# ensures compatibility across diverse development environments.

- AWS Server Integration: Developers can deploy MCP servers within AWS to connect enterprise apps with secure cloud infrastructure.

- GitHub Issues Automation: Use MCP to fetch, create, or update GitHub issues directly within development workflows.

- Slack Workflows: Automate Slack channels by integrating MCP tools for alerts, status updates, and team collaboration.

Why MCP is Developer-Friendly

- Open-source and flexible: Official SDKs cover several languages, and the JSON-RPC-based protocol can be implemented in others.

- Pre-built servers and SDKs: Minimize setup time.

- AI-assisted development: Models like Claude can even help write MCP server code.

With MCP, AI applications become smarter and more useful by seamlessly interacting with external data and tools, making it a powerful addition to any AI-driven workflow.

MCP and AI Agents

One of the most exciting aspects of MCP is its potential to serve as the foundational protocol for AI agents. Agents, which are AI systems capable of autonomous decision-making and task execution, rely heavily on context to function effectively. MCP provides the infrastructure for agents to access tools, retrieve data, and interact with external systems in a standardized way.

For instance, an agent tasked with researching quantum computing can use MCP to:

- Search the web: Using the Brave Search MCP server.

- Fetch data: From authoritative sources.

- Verify facts: Using a fact-checking agent.

- Generate a report: And save it to a file system.

This composability lets agents discover and use new tools and data sources at runtime. As the AI ecosystem grows, agents will be able to adapt and improve by connecting to new MCP servers without requiring code changes.
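At runtime, an agent host can discover tools by calling tools/list on every connected server and merging the results. The sketch below shows one way to do that, namespacing tool names by server so that two servers can expose tools with the same name. The server names, tool names, and the double-underscore naming scheme are assumptions for illustration, not part of MCP.

```python
def merge_tool_lists(servers: dict) -> dict:
    """Build a routing table from each server's tools/list result.

    Keys are namespaced as "<server>__<tool>" so two servers can both
    expose, say, "search" without colliding. Values map back to
    (server_name, original_tool_name) for dispatching tools/call.
    """
    routes = {}
    for server, tools in servers.items():
        for tool in tools:
            routes[f"{server}__{tool['name']}"] = (server, tool["name"])
    return routes


def route_call(routes: dict, qualified_name: str, arguments: dict) -> tuple:
    """Turn the agent's tool choice into (server, tools/call params)."""
    server, tool = routes[qualified_name]
    return server, {"name": tool, "arguments": arguments}


if __name__ == "__main__":
    # Hypothetical tools/list results from two connected servers.
    discovered = {
        "websearch": [{"name": "search"}],
        "files": [{"name": "search"}, {"name": "write_file"}],
    }
    routes = merge_tool_lists(discovered)
    print(sorted(routes))
    print(route_call(routes, "files__write_file", {"path": "report.md", "content": "Draft"}))
```

Connecting one more server only adds entries to the discovered mapping; the routing code itself does not change.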

Building an AI-Powered Research Assistant for Financial Analysts Using LangChain and MCP

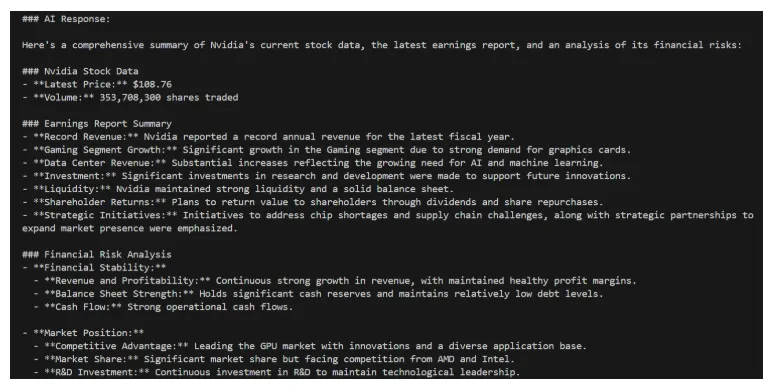

In this post, we’ll develop an AI assistant that automates financial research by integrating multiple AI-driven services using Anthropic’s Model Context Protocol (MCP) with LangChain MCP adapters. This AI agent will streamline financial analysis by:

- Fetching real-time stock market data

- Summarizing financial reports & news articles

- Generating insights and risk assessments

What We’re Building

Our AI-powered research assistant will consist of three specialized MCP servers that will handle different aspects of financial analysis:

- Market Data Retriever: Fetches real-time stock data and key market indicators.

- Financial Report Summarizer: Analyzes financial reports, balance sheets, and earnings calls.

- AI-driven Risk Assessment Tool: Assesses risks based on trends, market conditions, and financial reports.

All three servers are connected through LangChain’s MultiServerMCPClient, which exposes their tools to a single agent.

Required Packages

```bash
pip install langchain-openai langchain-mcp-adapters langgraph mcp yfinance python-dotenv beautifulsoup4
```

Create a .env file that defines your OPENAI_API_KEY.

1. Market Data Retriever MCP Server

This server, saved as market_data.py, fetches the latest stock price and trading volume using the yfinance library, which retrieves Yahoo Finance data (quotes may be delayed).

```python
import sys

import yfinance as yf
from mcp.server.fastmcp import FastMCP

# The client refers to this server as "marketdata".
mcp = FastMCP("marketdata")


@mcp.tool()
async def get_stock_data(ticker: str) -> dict:
    """Fetch the latest price and trading volume for a stock ticker."""
    try:
        stock = yf.Ticker(ticker)
        data = stock.history(period="1d")
        if data.empty:
            return {"error": "Invalid stock ticker or no data available."}
        return {
            "symbol": ticker,
            # Cast NumPy scalars to plain Python types for JSON serialization.
            "latest_price": round(float(data["Close"].iloc[-1]), 2),
            "volume": int(data["Volume"].iloc[-1]),
        }
    except Exception as e:
        return {"error": str(e)}


if __name__ == "__main__":
    # With the stdio transport, stdout carries JSON-RPC messages, so log to stderr.
    print("Market Data MCP Server is running...", file=sys.stderr)
    mcp.run()
```

2. Financial Report Summarizer MCP Server

This server, saved as financial_summarizer.py, summarizes financial reports, news articles, and earnings call transcripts using OpenAI’s GPT-4o model.

```python
import sys

from dotenv import load_dotenv
from langchain_openai import ChatOpenAI
from mcp.server.fastmcp import FastMCP

load_dotenv()  # Load OPENAI_API_KEY from the .env file

mcp = FastMCP("financialsummarizer")
# verbose=True dropped to keep any LangChain logging off stdout (the stdio channel).
model = ChatOpenAI(model="gpt-4o")


@mcp.tool()
async def summarize_report(report_text: str) -> str:
    """Summarize a given financial report or news article."""
    try:
        response = await model.ainvoke([
            ("system", "Summarize the following financial report in bullet points."),
            ("human", report_text),
        ])
        return response.content
    except Exception as e:
        return f"Error: {e}"


if __name__ == "__main__":
    # Log to stderr: stdout is reserved for the stdio JSON-RPC stream.
    print("Financial Summarizer MCP Server is running...", file=sys.stderr)
    mcp.run()
```

3. AI-driven Risk Assessment MCP Server

This server, saved as risk_assessment.py, prompts GPT-4o to evaluate market risks, company stability, and potential investment risks in a given report or market trend.

```python
import sys

from dotenv import load_dotenv
from langchain_openai import ChatOpenAI
from mcp.server.fastmcp import FastMCP

load_dotenv()  # Load OPENAI_API_KEY from the .env file

mcp = FastMCP("riskassessment")
# verbose=True dropped to keep any LangChain logging off stdout (the stdio channel).
model = ChatOpenAI(model="gpt-4o")


@mcp.tool()
async def assess_risk(report_text: str) -> str:
    """Analyze the risk level of a financial report or market trend."""
    try:
        response = await model.ainvoke([
            ("system", "Analyze the financial risk or market trend based on this report."),
            ("human", report_text),
        ])
        return response.content
    except Exception as e:
        return f"Error: {e}"


if __name__ == "__main__":
    # Log to stderr so the message doesn't corrupt the JSON-RPC stream on stdout.
    print("Risk Assessment MCP Server is running...", file=sys.stderr)
    mcp.run()
```

4. Connecting Everything with MultiServerMCPClient

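Let’s connect our three MCP servers. Run this client from the directory that contains the three server files, since it launches them by relative path.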

```python
import asyncio
import sys

from dotenv import load_dotenv
from langchain_core.messages import AIMessage
from langchain_mcp_adapters.client import MultiServerMCPClient
from langchain_openai import ChatOpenAI
from langgraph.prebuilt import create_react_agent

load_dotenv()  # Load environment variables (OPENAI_API_KEY)

model = ChatOpenAI(model="gpt-4o")
python_path = sys.executable  # Launch each server with the current interpreter


def parse_ai_messages(data):
    """Return the content of every AIMessage in the agent's final state."""
    messages = dict(data).get("messages", [])
    return [
        f"### AI Response:\n\n{msg.content}\n\n"
        for msg in messages
        if isinstance(msg, AIMessage)
    ]


async def main():
    print("Connecting to MCP servers...")
    # As of langchain-mcp-adapters 0.1.0, the client takes a connections dict
    # and can no longer be used as an async context manager.
    client = MultiServerMCPClient({
        "marketdata": {"command": python_path, "args": ["market_data.py"], "transport": "stdio"},
        "financialsummarizer": {"command": python_path, "args": ["financial_summarizer.py"], "transport": "stdio"},
        "riskassessment": {"command": python_path, "args": ["risk_assessment.py"], "transport": "stdio"},
    })
    tools = await client.get_tools()

    # Create a ReAct-style agent that can call any of the MCP tools.
    agent = create_react_agent(model, tools, debug=True)

    # Request: get stock data, summarize reports, analyze risks
    request = {
        "messages": "Get stock data for Nvidia, summarize the latest year's "
                    "earnings report, and analyze financial risks."
    }
    results = await agent.ainvoke(request)

    for message in parse_ai_messages(results):
        print(message)


if __name__ == "__main__":
    asyncio.run(main())
```

Input

Get stock data for Nvidia, summarize the latest year’s earnings report, and analyze financial risks.

Output

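The result is a financial research assistant that fetches market data, summarizes financial reports, and conducts AI-driven risk assessments from a single request. Note that none of the servers downloads filings, so the summarizer and risk tools work from whatever report text the agent passes to them.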

Key Features of MCP

- Sampling: MCP allows servers to request completions (LLM inference calls) from clients, enabling intelligent interactions without requiring the server to host its own LLM.

- Composability: MCP clients can also act as servers, creating hierarchical systems of agents and tools that work together seamlessly.

- Resource Notifications: Servers can notify clients when resources are updated, ensuring that applications always have the latest information.

- Remote Servers: With support for OAuth 2.1 and HTTP-based transports (originally HTTP+SSE, now Streamable HTTP), MCP enables remotely hosted servers, making it easier to discover and use tools without local installations.
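Resource notifications pair with subscriptions: if a server advertises the resources.subscribe capability, a client can send resources/subscribe for a URI and later receive notifications/resources/updated when that resource changes. The sketch below uses those message shapes from the MCP specification; the ResourceCache class and the injected read function (standing in for a real resources/read round trip) are hypothetical.

```python
class ResourceCache:
    """Client-side cache that re-reads a resource after an update notification."""

    def __init__(self, read_fn):
        # read_fn(uri) stands in for a resources/read round trip (hypothetical).
        self._read = read_fn
        self._cache = {}
        self._stale = set()

    @staticmethod
    def subscribe_request(request_id: int, uri: str) -> dict:
        """Ask the server to send notifications/resources/updated for uri."""
        return {"jsonrpc": "2.0", "id": request_id,
                "method": "resources/subscribe", "params": {"uri": uri}}

    def handle_notification(self, message: dict) -> None:
        """Mark a cached resource stale when the server reports an update."""
        if message.get("method") == "notifications/resources/updated":
            self._stale.add(message["params"]["uri"])

    def get(self, uri: str):
        """Return cached contents, re-reading only if missing or stale."""
        if uri not in self._cache or uri in self._stale:
            self._cache[uri] = self._read(uri)
            self._stale.discard(uri)
        return self._cache[uri]


if __name__ == "__main__":
    versions = iter(["v1", "v2"])
    cache = ResourceCache(lambda uri: next(versions))
    uri = "file:///reports/q3.json"  # hypothetical resource URI
    print(cache.subscribe_request(1, uri))
    print(cache.get(uri), cache.get(uri))  # second call is served from cache
    cache.handle_notification({"jsonrpc": "2.0",
                               "method": "notifications/resources/updated",
                               "params": {"uri": uri}})
    print(cache.get(uri))  # re-read after the update
```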

What’s Next for MCP?

The future of MCP is bright, with several exciting developments on the horizon:

- Registry API: A centralized metadata service for discovering and publishing MCP servers, making it easier for developers to find and use tools.

- Well-Known Endpoints: A standardized way for companies to advertise their MCP servers, enabling agents to dynamically discover and use new tools.

- Stateful vs. Stateless Connections: Support for short-lived connections, allowing clients to disconnect and reconnect without losing context.

- Streaming: First-class support for streaming data between servers and clients.

- Proactive Server Behavior: Enabling servers to initiate interactions with clients based on events or deterministic logic.

FAQs:

Can I deploy MCP servers on AWS, Azure, or Google Cloud?

Yes. An MCP server is an ordinary process or web service, so it can run on any major cloud. AWS launched MCP server support in July 2025, and Azure and Google Cloud have followed with their own MCP tooling.

How do I deploy an MCP server in production vs. local development?

Use the stdio transport for local testing and the Streamable HTTP transport for remote production servers. Consider scalability, security, and monitoring when deploying at enterprise scale.
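In the tutorial above, this choice comes down to the connection entry passed to MultiServerMCPClient. The hypothetical helper below returns a stdio entry for local development and a Streamable HTTP entry for production. The key names follow the langchain-mcp-adapters connection format used in the client script; the base URL and the /mcp endpoint path are assumptions you should match to your own deployment.

```python
import sys


def server_connection(name: str, env: str, base_url: str = "http://localhost:8000") -> dict:
    """Hypothetical helper: stdio locally, Streamable HTTP everywhere else."""
    if env == "local":
        # Spawn the server as a subprocess; messages flow over stdin/stdout.
        return {"command": sys.executable, "args": [f"{name}.py"], "transport": "stdio"}
    # Connect to an already-running server; "/mcp" is an assumed endpoint path.
    return {"url": f"{base_url}/mcp", "transport": "streamable_http"}


if __name__ == "__main__":
    print(server_connection("market_data", "local"))
    print(server_connection("market_data", "prod", "https://mcp.example.com"))
```

On the server side, recent versions of the Python SDK let a FastMCP server switch transports with mcp.run(transport="streamable-http"); check the SDK documentation for the version you use.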

What’s the difference between MCP and traditional API integrations?

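MCP wraps capabilities in self-describing tools, resources, and prompts that an AI client can discover at runtime; each tool, for example, advertises a name, a description, and an input schema. Unlike raw API calls, which each need their own client code, MCP standardizes communication for safer and more predictable AI usage.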

Is MCP suitable for enterprise compliance and security requirements?

Largely, yes. MCP’s authorization model builds on OAuth 2.1 and resource indicators, and servers can map OAuth scopes to role-based access policies. Compliance still depends on how each server is deployed, audited, and monitored.

Why MCP is the Future of AI

Struggling with AI system compatibility? MCP isn’t just a protocol; it’s the key to seamless integration between AI applications and external systems, making your AI solutions more efficient, interoperable, and scalable. With MCP, you can enhance automation, optimize data flow, and build smarter, context-aware AI applications tailored to your business needs.

MCP Integrations for Scalable AI Solutions

At Bluetick Consultants Inc., we help enterprises adopt the Model Context Protocol (MCP) to connect AI tools with business data in a secure and scalable way. From AI-powered automation and cloud-native integrations to cross-platform workflows, our solutions are built to simplify complexity and increase productivity.

Whether it’s setting up servers on AWS or managing enterprise data access, we make MCP adoption simple and aligned with business goals. With a decade of proven experience in AI automation, system integration, and product engineering, Bluetick Consultants ensures every MCP deployment is tailored to your enterprise needs.