Introduction

The desire to infuse machines with the ability to make images, a seemingly simple task for humans, has fueled substantial advances in artificial intelligence. Image generative models have progressed beyond basic outputs, creating lifelike graphics and even artistic renderings. These models use advanced mathematical and computational techniques to analyze the intricate patterns and structures seen in image data, allowing the creation of unique samples that follow the learnt data distribution. This article delves into the underlying mechanics of these models, starting with fundamental principles and progressing to pivotal architectures like Variational Autoencoders (VAEs) and Generative Adversarial Networks (GANs), culminating in a discussion of GANs that use the Wasserstein distance.

Foundations of Image Generation: Learning the Underlying Distribution

At its core, image generation hinges on learning a probability distribution, P(x), over the space of all possible images x. This distribution encapsulates the likelihood of observing any given image. Early approaches relied on simpler statistical models, such as Markov Random Fields, which, while conceptually sound, struggled with the high dimensionality of image data. The advent of deep learning provided the necessary tools to navigate this complexity. Modern generative models typically employ neural networks to learn complex mappings between a latent space z (a lower-dimensional vector space, often assumed to follow a simple distribution like a Gaussian) and the image space x. This mapping, denoted as G(z), transforms a point in the latent space into a corresponding image.

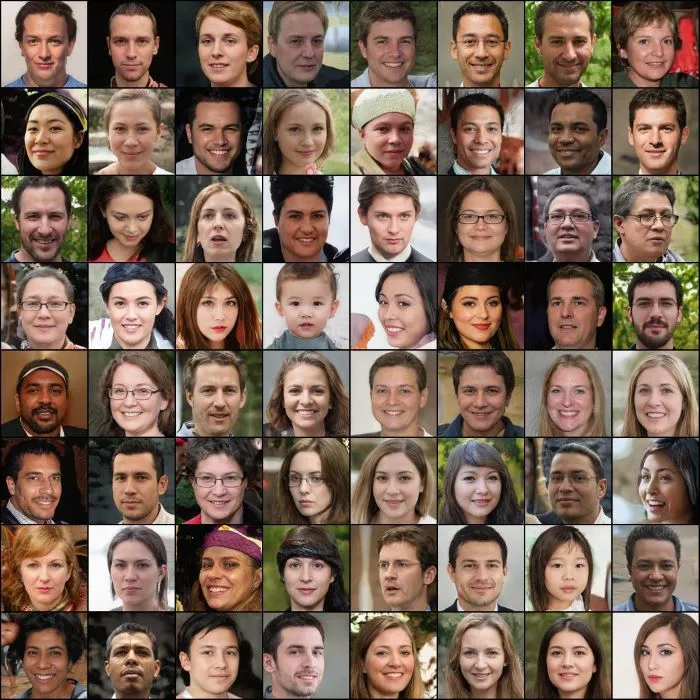

For example, consider generating images of faces. The latent space might encode features like facial structure, skin tone, eye color, and hair style. A specific point in this latent space would correspond to a particular combination of these features, and the generator network would then “decode” this point into a realistic image of a face.

Variational Autoencoders (VAEs): Probabilistic Encoding and Decoding

VAEs introduce a probabilistic framework for learning latent representations. They consist of two primary components: an encoder, q(z|x), and a decoder, p(x|z). The encoder takes an input image x and maps it to a probability distribution in the latent space, typically a Gaussian distribution with mean μ(x) and variance σ²(x). This distribution represents the uncertainty in the latent representation given the input image. The decoder then takes a sample z from this latent distribution and maps it back to the image space, attempting to reconstruct the original image.

The key innovation of VAEs lies in the use of variational inference. Directly computing the true posterior distribution p(z|x) is often intractable. Instead, VAEs learn an approximate posterior distribution q(z|x) by minimizing the Kullback-Leibler (KL) divergence between q(z|x) and a prior distribution p(z) over the latent space (typically a standard Gaussian). This minimization is performed jointly with a reconstruction loss, which measures the difference between the input image x and the reconstructed image p(x|z). The combined loss function, known as the Evidence Lower Bound (ELBO), is given by:

ELBO = E

q(z|x)

[log p(x|z)] − KL(q(z|x)||p(z))

The first term encourages accurate reconstruction, while the second term encourages the learned latent distribution to be close to the prior. This process forces the latent space to be well-structured, allowing for meaningful interpolation between latent vectors and smooth transitions between generated images. However, the inherent averaging effect of the reconstruction loss can lead to blurry generated images, as the model tries to capture the average of many possible reconstructions.

Generative Adversarial Networks (GANs): An Adversarial Game

GANs employ a fundamentally different approach, utilizing a two-network architecture: a generator G(z) and a discriminator D(x). The generator learns to generate synthetic images from random noise z sampled from a latent space, while the discriminator learns to distinguish between real images from the training set and fake images generated by the generator.

These two networks engage in an adversarial game. The generator aims to minimize the probability that the discriminator correctly classifies its generated images as fake, while the discriminator aims to maximize this probability. This can be expressed as a minimax game with the following objective function:

minG maxD V(D, G) = Ex~pdata(x)[log D(x)] + Ez~pz(z)[log(1 – D(G(z)))]

Where pdata(x) is the distribution of real data and pz(z) is the distribution of the latent noise.

Theoretically, this game converges to a Nash equilibrium where the generator produces perfect fakes that the discriminator cannot distinguish from real images, i.e., D(x) = 1/2. However, in practice, training GANs can be challenging due to issues like mode collapse (where the generator only produces a limited variety of images) and vanishing gradients (where the discriminator becomes too good, preventing the generator from learning).

GANs with Wasserstein Distance: Addressing Training Instability

Wasserstein GANs (WGANs) address the training instability of traditional GANs by using the Wasserstein distance (Earth Mover’s distance) as a measure of the difference between the real and generated data distributions. The Wasserstein distance provides a smoother and more meaningful measure compared to the Jensen-Shannon divergence used in traditional GANs.

The key modification in WGANs is replacing the discriminator with a “critic” f(x), which is constrained to be Lipschitz continuous. This constraint ensures that the Wasserstein distance can be accurately estimated. The objective function for WGANs is:

minG maxf∈Lip Ex~pdata(x)[f(x)] – Ez~pz(z)[f(G(z))]

Where Lip denotes the set of Lipschitz continuous functions. In practice, the Lipschitz constraint is often enforced using weight clipping or gradient penalty techniques. The use of the Wasserstein distance results in more stable training, better sample quality, and a loss function that correlates better with sample quality compared to traditional GANs.

Advanced Architectures and Improvements

StyleGAN and Progressive Growing

StyleGAN revolutionized image generation with its style-based generator, enabling precise control over features like pose, texture, and lighting. Its progressive growing technique stabilizes training by incrementally increasing image resolution, learning features hierarchically from coarse to fine. Noise injection at intermediate layers enhances realism and variation. Developers can fine-tune pre-trained StyleGAN models using tools like PyTorch for applications such as synthetic facial data and virtual character creation.

class ProgressiveGenerator(nn.Module):

def __init__(self, latent_dim, initial_resolution=4):

super().__init__()

self.initial = nn.Sequential(

nn.ConvTranspose2d(latent_dim, 512, initial_resolution),

nn.LeakyReLU(0.2)

)

self.progressive_blocks = nn.ModuleList([

# Each block doubles the resolution

ProgressiveBlock(512, 512), # 4x4 -> 8x8

ProgressiveBlock(512, 256), # 8x8 -> 16x16

ProgressiveBlock(256, 128) # 16x16 -> 32x32

])

Conditional Generation

Conditional generation enables models to produce outputs guided by inputs like class labels or textual descriptions. Conditional GANs (cGANs) allow tailored outputs, while models like Pix2Pix excel in transforming image types, such as sketches into photorealistic images. Implementing these models requires annotated datasets and frameworks like TensorFlow for tasks like training cGANs. Applications include augmented reality, personalized marketing, and healthcare, where customization is critical.

Hybrid Approaches

Hybrid architectures, like VAE-GANs and Diffusion-GANs, combine the strengths of different generative models. VAE-GANs blend VAEs’ smooth latent representation with GANs’ high-fidelity output, while Diffusion-GANs enhance diversity and stability by integrating diffusion principles into adversarial training. These hybrids are impactful in fields like medical imaging and creative industries, offering precision and variability. Developers can leverage PyTorch for implementing these models with domain-specific data.

Applications of Image Generative Models: Transforming Industries

Image generative models have revolutionized diverse fields by enabling applications that were previously infeasible or prohibitively complex. These models unlock creative, technical, and functional possibilities across multiple industries.

- Art and Content Creation

Generative models enable artists to craft digital art and illustrations using tools like DALL-E, DeepArt, and Runway ML, turning simple text inputs into stunning visuals or mimicking artistic styles for unique multimedia projects. - Game Development

In gaming, generative models streamline design by creating textures, characters, and expansive environments. Titles like No Man’s Sky employ procedural algorithms and GANs to design realistic NPC faces and vast landscapes efficiently. - Data Augmentation

Generative models enhance machine learning by creating synthetic data for diverse applications. Companies like Waymo use these models to generate varied road scenarios, improving the reliability of autonomous vehicle systems. - Healthcare Applications

Generative models support medical imaging by creating synthetic datasets for training diagnostic AI, ensuring patient privacy. GANs generate high-resolution MRI scans, advancing radiology tools for detecting tumors and anomalies. - Retail and E-commerce

Virtual try-on solutions powered by generative models allow customers to visualize clothing and accessories. Retailers like Zalando leverage these technologies for enhanced online shopping experiences.

Real-World Impact

- Synthetic Faces in Media: Platforms like This Person Does Not Exist produce hyper-realistic faces, reducing reliance on stock photos.

- Image Restoration: Tools like NVIDIA’s DeepFill repair damaged images, aiding preservation and forensics.

- Personalized Experiences: Adobe’s Neural Filters enhance photos with user-specific edits for professionals and hobbyists alike.

Challenges in Image Generative Models

Despite their transformative capabilities, image generative models face several challenges that limit their adoption and raise ethical concerns.

- Training Stability: Adversarial dynamics in GANs can lead to instability and issues like mode collapse.

- Data Quality and Bias: Models can inherit biases, producing outputs with ethical implications in healthcare and law enforcement.

- Ethical Concerns: Generative models risk misuse in creating deepfakes, spreading misinformation, or identity theft.

Future Directions in Image Generative Models

As these models become more efficient, versatile, and accessible, their impact is likely to grow, offering unprecedented opportunities while requiring thoughtful oversight.

- Efficiency Enhancements: Techniques like neural architecture search aim to create faster, lighter models for deployment on edge devices.

- Multimodal Generation: Models like Stable Diffusion are expanding capabilities to combine image, text, audio, and video seamlessly.

- Ethical AI: Developing detection mechanisms and transparent governance frameworks ensures responsible use of generative technologies.

- Domain-Specific Models: Tailored generative models for niche areas, like synthetic biology or architecture, promise expanded applications and optimized performance.

Conclusion

Image generative models have revolutionized the field of computer vision, demonstrating remarkable capabilities in synthesizing realistic and diverse imagery. From the probabilistic framework of VAEs to the adversarial training of GANs, these models have pushed the boundaries of what is possible. The introduction of the Wasserstein distance has further refined GAN training, leading to more stable and effective models. Ongoing research continues to explore new architectures, training techniques, and applications, promising even more exciting advancements in the future.

Ready to unlock the full potential of image-generative models for your business? Contact Bluetick Consultants today and turn your vision into reality.