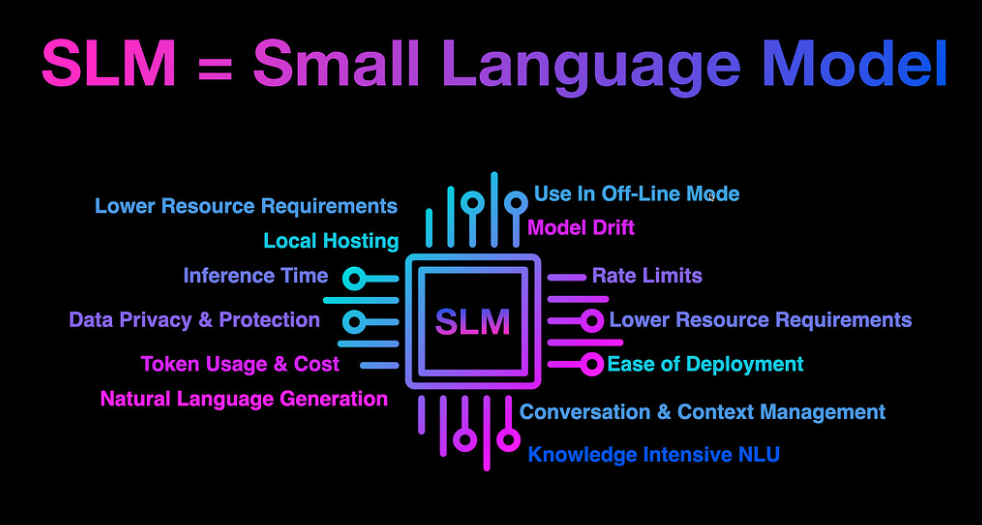

Small Language Models (SLMs) are revolutionising the field of natural language processing (NLP) by offering efficient, cost-effective, and highly capable alternatives to larger language models (LLMs). Let’s delve into what SLMs are, their advantages, technical specifics, and applications.

What are Small Language Models?

SLMs are compact versions of language models designed to perform specific tasks efficiently while consuming fewer computational resources. They are engineered to provide powerful NLP capabilities on devices with limited processing power, such as smartphones, IoT devices, and edge computing environments.

Examples of Small Language Models (SLMs)

- Orca 2: Developed by Microsoft, Orca 2 is a fine-tuned version of Meta’s Llama 2, utilising high-quality synthetic data. This innovative model achieves performance levels that rival or surpass those of larger models, particularly in zero-shot reasoning tasks.

- Phi 2: Phi 2, another model by Microsoft, is a transformer-based Small Language Model engineered for efficiency and adaptability in both cloud and edge deployments. It excels in areas such as mathematical reasoning, common sense, language understanding, and logical reasoning.

- DistilBERT: DistilBERT is a distilled, lightweight version of BERT, a pioneering model in natural language processing (NLP). Its authors report that it is about 40% smaller and 60% faster than BERT while retaining roughly 97% of its language-understanding performance.

- GPT-Neo and GPT-J: These open-source models from EleutherAI follow the architecture of OpenAI’s GPT models at a smaller scale, providing versatility in application scenarios with more limited computational resources.

Advantages of SLMs

- Resource Efficiency: SLMs require significantly less memory and processing power, making them ideal for deployment on low-capability devices.

- Faster Inference: Due to their smaller size, SLMs can process data and generate responses more quickly, which is crucial for real-time applications.

- Cost-Effectiveness: Reduced computational requirements translate to lower operational costs, providing an economical solution for businesses.

- Enhanced Privacy and Security: SLMs can operate offline, keeping data on the device, which enhances privacy and security, particularly important in regulated industries.

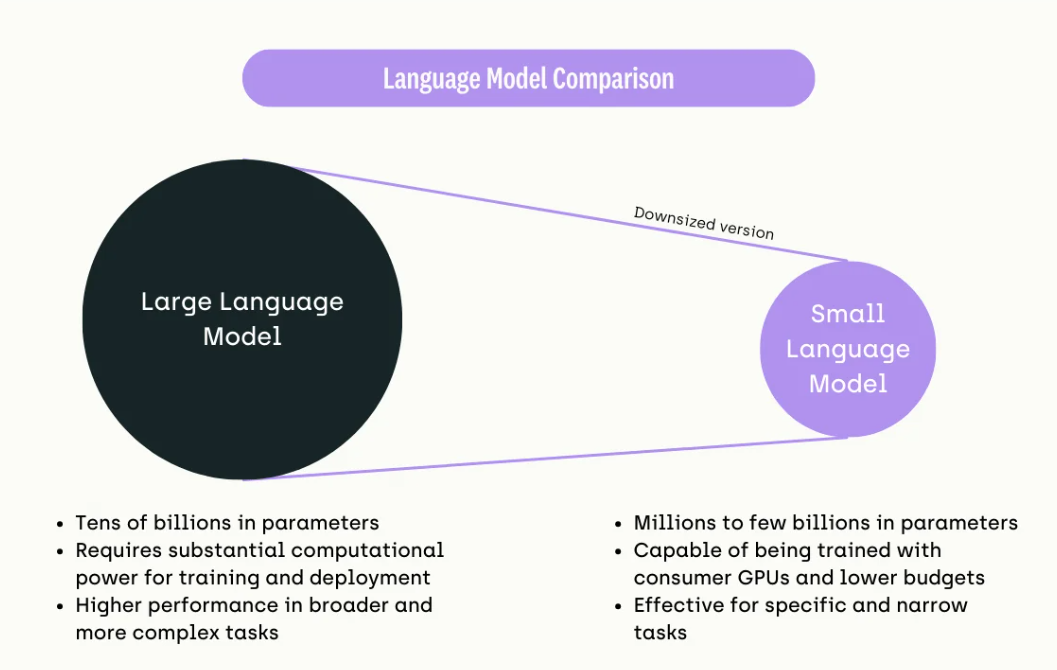

Small vs. Large Language Models: What Sets Them Apart

Small Language Models (SLMs) and Large Language Models (LLMs) serve different purposes in the realm of AI. This section examines the critical factors that differentiate them and their suitability for various applications. Here are ten primary distinctions:

- Size: LLMs such as Claude 3 and Olympus are far larger than SLMs; Olympus is reported to have around 2 trillion parameters, whereas SLMs like Phi-2 have around 2.7 billion. The vast difference in size significantly impacts their capabilities and applications.

- Training Data: LLMs require extensive and varied datasets to meet broad learning objectives. In contrast, SLMs use smaller, more specialised datasets, making them suitable for focused and niche tasks.

- Training Time: Training an LLM can take several months due to its complexity and the volume of data involved. SLMs, being less complex, can be trained within weeks.

- Computing Power and Resources: LLMs demand substantial computing resources for both training and operation, consuming a lot of power. SLMs, while still resource-intensive, require considerably less computing power, making them a more sustainable option.

- Proficiency: LLMs excel at handling complex, sophisticated, and general tasks due to their vast size and training. SLMs are more appropriate for simpler, more specific tasks where broad proficiency isn’t as critical.

- Adaptation: LLMs are challenging to adapt for customised tasks, often requiring significant fine-tuning efforts. SLMs, on the other hand, are easier to customise and fine-tune to meet specific needs and requirements.

- Inference: LLMs need specialised hardware such as GPUs and cloud services to conduct inference, which often necessitates an internet connection. SLMs are compact enough to run locally on devices like a Raspberry Pi or a smartphone, enabling offline operation.

- Latency: LLMs can suffer from high latency, which is especially noticeable in real-time applications like voice assistants. SLMs, due to their smaller size, typically respond more quickly.

- Cost: The high computational demands of LLMs translate to higher token costs, making them expensive to run. SLMs, requiring less computational power, are more cost-effective to operate.

- Control: With LLMs, you are dependent on the model builders, which can lead to issues like model drift or catastrophic forgetting if the model is updated. SLMs offer greater control, allowing you to run them on your own servers, fine-tune them as needed, and freeze them to prevent changes.
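To make the size gap concrete, here is a rough back-of-the-envelope sketch of how much memory the weights alone would need at different numeric precisions. It uses the parameter counts quoted above (2.7 billion for Phi-2, and the reported 2 trillion as an upper-end LLM figure); the helper name `weight_memory_gb` and the bytes-per-parameter table are illustrative assumptions, and the estimate ignores activations, the KV cache, and runtime overhead.

```python
# Approximate storage cost per parameter for common precisions (assumed values).
BYTES_PER_PARAM = {"fp32": 4.0, "fp16": 2.0, "int8": 1.0, "int4": 0.5}


def weight_memory_gb(num_params: float, dtype: str = "fp16") -> float:
    """Return the approximate size of the weights alone, in GB (10^9 bytes)."""
    return num_params * BYTES_PER_PARAM[dtype] / 1e9


# Parameter counts taken from the comparison above.
models = [("SLM, 2.7B parameters", 2.7e9), ("LLM, 2T parameters", 2e12)]

for name, params in models:
    for dtype in ("fp16", "int4"):
        size = weight_memory_gb(params, dtype)
        print(f"{name:22s} {dtype}: {size:,.1f} GB")
```

Under these assumptions, a 2.7-billion-parameter model needs only a few gigabytes at 16-bit precision, which is why it can plausibly fit on a laptop or a high-end phone, while a model in the trillion-parameter range needs terabytes spread across many accelerators.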

Technical Insights

Recent advancements in SLMs, like Microsoft’s Phi-3 models, have demonstrated that careful data selection and innovative training techniques can yield models that perform on par with much larger models. Here are some technical highlights:

- High-Quality Training Data: The performance of SLMs like Phi-3 is largely attributed to the use of “textbook-quality” data, which ensures that the models are trained on high-value, carefully curated datasets. This approach helps the models to understand and generate more accurate and contextually relevant responses.

- Efficient Architecture: Phi-3 models, for instance, employ Transformer-based architectures optimised for lower computational overhead while maintaining high performance. These models are instruction-tuned, meaning they are designed to understand and follow various instructions as humans would communicate, making them ready to use out-of-the-box.

- Scalable Deployment: SLMs can be deployed across different environments, including the cloud, edge devices, and even offline scenarios. This flexibility ensures that SLMs can be utilised in a wide range of applications, from mobile devices to autonomous systems.

Applications of SLMs

- Chatbots and Virtual Assistants: SLMs can power conversational agents, providing quick and relevant responses for customer support and personal assistance.

- Language Translation: They can handle basic translation tasks, offering real-time language conversion on devices with limited computational power.

- Text Summarization: SLMs effectively summarise long documents, extracting key information and presenting it concisely.

- Sentiment Analysis: Businesses can use SLMs to analyse customer feedback and social media posts, gaining insights into public sentiment with minimal computational overhead.

Future Directions

While SLMs offer numerous benefits, challenges remain, such as a more limited capacity for complex language patterns and reduced accuracy compared to LLMs on broad, open-ended tasks. However, ongoing research is focused on enhancing their capabilities through better training algorithms and data selection techniques. Models such as Phi-3 show how these improvements can lead to SLMs that rival much larger models in performance.

Code Implementation

Let’s evaluate Phi-3 mini (an SLM) against GPT-4 Turbo across domains such as logical reasoning, mathematical problem-solving, recommendation, ethical judgement, and emotional interpretation.

Install Required Libraries

!pip install git+https://github.com/huggingface/transformers

!pip install accelerate

Importing Libraries

import torch

from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

Loading the Model and Tokenizer

Load the pre-trained model and tokenizer. Here, we use Microsoft’s Phi-3-mini-128k-instruct model.

model = AutoModelForCausalLM.from_pretrained(

"microsoft/Phi-3-mini-128k-instruct",

device_map="cuda",

torch_dtype="auto",

trust_remote_code=True,

)

tokenizer = AutoTokenizer.from_pretrained("microsoft/Phi-3-mini-128k-instruct")

Defining Messages

Define the query and the conversation messages between the user and the assistant. The query is defined first because the final user message refers to it.

query = (
    "In a room, there are 5 people. Each person shakes hands with "
    "every other person exactly once. How many handshakes occur in total? "
    "Explain your reasoning."
)

messages = [
    {"role": "user", "content": "Can you provide ways to eat combinations of bananas and dragonfruits?"},
    {"role": "assistant", "content": (
        "Sure! Here are some ways to eat bananas and dragonfruits together: "
        "1. Banana and dragonfruit smoothie: Blend bananas and dragonfruits "
        "together with some milk and honey. "
        "2. Banana and dragonfruit salad: Mix sliced bananas and dragonfruits "
        "together with some lemon juice and honey."
    )},
    {"role": "user", "content": query},
]

Creating the Pipeline

Initialise the text generation pipeline with the specified model and tokenizer.

pipe = pipeline("text-generation", model=model, tokenizer=tokenizer)

Defining Generation Arguments

Define the arguments for text generation. These control the behaviour of the generated output.

generation_args = {"max_new_tokens": 500, "return_full_text": False, "temperature": 0.0, "do_sample": False}

Generating Responses

Use the pipeline to generate a response based on the defined messages (which end with the query) and the generation arguments.

output = pipe(messages, **generation_args)

print(output[0]['generated_text'])
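The generation arguments set `do_sample` to `False`, which means the next token is always the highest-scoring one (greedy decoding) and the temperature has no effect. The toy sketch below illustrates that difference in plain Python; it is not how the `transformers` library is implemented internally, and the names `softmax`, `pick_token`, and `toy_logits` are made up for the illustration.

```python
import math
import random


def softmax(logits, temperature=1.0):
    """Turn raw scores into probabilities; a lower temperature sharpens them."""
    scaled = [x / temperature for x in logits]
    peak = max(scaled)  # subtract the maximum for numerical stability
    exps = [math.exp(x - peak) for x in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def pick_token(logits, do_sample=False, temperature=1.0, rng=None):
    """Choose a token index greedily, or sample one from the softmax distribution."""
    if not do_sample:
        # Greedy decoding: always take the index with the largest score.
        return max(range(len(logits)), key=lambda i: logits[i])
    rng = rng or random.Random()
    probs = softmax(logits, temperature)
    return rng.choices(range(len(logits)), weights=probs, k=1)[0]


toy_logits = [2.0, 1.0, 0.1]  # hypothetical scores for three candidate tokens

print("greedy choice:", pick_token(toy_logits))
print("probabilities at T=1.0:", [round(p, 3) for p in softmax(toy_logits, 1.0)])
print("probabilities at T=0.1:", [round(p, 3) for p in softmax(toy_logits, 0.1)])
print("sampled choice:", pick_token(toy_logits, do_sample=True, rng=random.Random(0)))
```

Greedy decoding makes the comparison repeatable, since the same prompt yields the same answer on every run; sampling would be the choice when varied or more creative output is wanted.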

Output

### response: To solve this problem, we can use the concept of combinations from combinatorics. A handshake involves two people, so we are looking for the number of unique pairs of people that can be formed from the group of 5.

The formula for combinations is given by:

\[ C(n, k) = \frac{n!}{k!(n-k)!} \]

where \( n \) is the total number of items, \( k \) is the number of items to choose, and \( ! \) denotes factorial.

In this case, \( n = 5 \) (the total number of people) and \( k = 2 \) (since a handshake involves 2 people). Plugging these values into the formula, we get:

\[ C(5, 2) = \frac{5!}{2!(5-2)!} \]

Calculating the factorials, we have:

\[ 5! = 5 \times 4 \times 3 \times 2 \times 1 = 120 \]

\[ 2! = 2 \times 1 = 2 \]

\[ (5-2)! = 3! = 3 \times 2 \times 1 = 6 \]

Now, we can substitute these values into the combination formula:

\[ C(5, 2) = \frac{120}{2 \times 6} \]

\[ C(5, 2) = \frac{120}{12} \]

\[ C(5, 2) = 10 \]

Therefore, there are 10 unique handshakes that occur in total.
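The model’s answer can be checked independently with a few lines of Python. This is a small verification sketch rather than part of the original notebook; the list `people` and its labels are placeholders.

```python
from itertools import combinations
from math import comb

people = ["A", "B", "C", "D", "E"]  # placeholder labels for the 5 people

# Enumerate every unordered pair: each pair corresponds to one handshake.
handshakes = list(combinations(people, 2))

print(len(handshakes))      # count obtained by enumeration
print(comb(len(people), 2)) # count obtained from the formula C(5, 2)
```

Both the enumeration and the closed-form formula should agree with the model’s result of 10.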

Question-Response Comparison Table:

| Questions | Phi 3 mini | GPT-4 Turbo |
|---|---|---|
| Logical Reasoning Proficiency: In a room, there are 5 people. Each person shakes hands with every other person exactly once. How many handshakes occur in total? Explain your reasoning. | To solve this problem, we can use the concept of combinations from combinatorics. A handshake involves two people, so we are looking for the number of unique pairs of people that can be formed from the group of 5. The formula for combinations is given by: \[ C(n, k) = \frac{n!}{k!(n-k)!} \] where \( n \) is the total number of items, \( k \) is the number of items to choose, and \( ! \) denotes factorial. In this case, \( n = 5 \) (the total number of people) and \( k = 2 \) (since a handshake involves 2 people). Plugging these values into the formula, we get: \[ C(5, 2) = \frac{5!}{2!(5-2)!} \] Calculating the factorials, we have: \[ 5! = 5 \times 4 \times 3 \times 2 \times 1 = 120 \] \[ 2! = 2 \times 1 = 2 \] \[ (5-2)! = 3! = 3 \times 2 \times 1 = 6 \] Now, we can substitute these values into the combination formula: \[ C(5, 2) = \frac{120}{2 \times 6} \] \[ C(5, 2) = \frac{120}{12} \] \[ C(5, 2) = 10 \] Therefore, there are 10 unique handshakes that occur in total. | There are 10 handshakes. This can be calculated using the combination formula for selecting 2 people out of 5, which is C(5, 2) = 5! / [2!(5-2)!] = 10. Alternatively, you can reason that the first person shakes hands with 4 others, the second person with 3 others (excluding the handshake with the first person, which has already been counted), and so on: 4 + 3 + 2 + 1 = 10. |
| Math Competence: Solve the following system of linear equations and explain your steps: 3x + 4y = 10 and 2x - y = 3. | To solve the system of linear equations, we can use either the substitution method or the elimination method. In this case, I will use the elimination method because it allows us to directly eliminate one of the variables by combining the equations. The system of equations is: 1) 3x + 4y = 10; 2) 2x - y = 3. Step 1: Make the coefficients of one of the variables the same in both equations. We can multiply the second equation by 4 to make the coefficients of y the same. 2x - y = 3; 4 * (2x - y) = 4 * 3; 8x - 4y = 12. Now we have: 1) 3x + 4y = 10; 3) 8x - 4y = 12. Step 2: Add or subtract the equations to eliminate one variable. We can add equation 1 and equation 3 to eliminate y. (3x + 4y) + (8x - 4y) = 10 + 12; 3x + 8x + 4y - 4y = 22; 11x = 22. Step 3: Solve for the remaining variable. Divide both sides by 11 to solve for x. 11x / 11 = 22 / 11; x = 2. Step 4: Substitute the value of the solved variable into one of the original equations to find the value of the other variable. We can substitute x = 2 into equation 2 to find y. 2x - y = 3; 2(2) - y = 3; 4 - y = 3. Step 5: Solve for the second variable. Subtract 4 from both sides to solve for y. -y = 3 - 4; -y = -1. Multiply both sides by -1 to get the value of y. y = 1. The solution to the system of equations is x = 2 and y = 1. | To solve the system of equations: 1. 3x + 4y = 10; 2. 2x - y = 3. We can use either the substitution method or the elimination method. Here, I’ll use the substitution method. **Step 1: Solve one of the equations for one variable.** Let’s solve the second equation for y: \[ 2x - y = 3 \] \[ -y = 3 - 2x \] \[ y = 2x - 3 \] (Multiply both sides by -1) **Step 2: Substitute the expression for y into the other equation.** Substitute \( y = 2x - 3 \) into the first equation: \[ 3x + 4(2x - 3) = 10 \] \[ 3x + 8x - 12 = 10 \] (Distribute 4 into the parentheses) \[ 11x - 12 = 10 \] (Combine like terms) **Step 3: Solve for x.** \[ 11x - 12 = 10 \] \[ 11x = 10 + 12 \] (Add 12 to both sides) \[ 11x = 22 \] \[ x = 2 \] (Divide both sides by 11) **Step 4: Substitute the value of x back into the equation for y.** \[ y = 2x - 3 \] \[ y = 2(2) - 3 \] \[ y = 4 - 3 \] \[ y = 1 \] **Solution:** The solution to the system of equations is \( x = 2 \) and \( y = 1 \). This means the point of intersection of the two lines represented by the equations is (2, 1). |
| Recommendation Evaluation: Given a user who enjoyed the movie ‘Inception,’ recommend another movie and explain why it would be a good choice. | Based on your interest in ‘Inception,’ I would recommend the movie ‘Interstellar.’ This film, directed by Christopher Nolan, shares several thematic elements with ‘Inception,’ such as complex narrative structures, exploration of time and space, and a deep focus on human emotion and relationships. | If you enjoyed ‘Inception,’ I recommend watching ‘Interstellar,’ another film directed by Christopher Nolan. Here’s why it would be a good choice: 1. **Similar Directorial Style**: Both films are directed by Christopher Nolan, known for his complex storytelling and innovative, intellectual themes. If you appreciated the intricate plot and the depth of ‘Inception,’ you are likely to enjoy ‘Interstellar’ for similar reasons. 2. **Themes of Exploration**: While ‘Inception’ explores the layers of the human mind and dreams, ‘Interstellar’ delves into the realms of space exploration and theoretical physics. Both movies challenge the audience’s perception of reality and push the boundaries of conventional storytelling. 3. **Emotional Depth**: ‘Interstellar,’ much like ‘Inception,’ combines a compelling emotional narrative with its high-concept ideas. In ‘Interstellar,’ the relationship between the main character and his daughter adds a deeply personal element to the story, similar to Cobb’s story with his children in ‘Inception.’ |
| Ethical Judgement: A friend confides in you about a serious issue but asks you to keep it a secret. However, you believe this information could prevent harm to others if disclosed. What would be the ethical course of action, and why? | The ethical course of action in this scenario would be to consider the potential consequences of both disclosing and withholding the information. The principle of nonmaleficence, which is the obligation not to inflict harm intentionally, would suggest that if the information could prevent harm to others, it may be ethically justified to disclose it. However, this must be balanced against the principle of confidentiality, which is important in maintaining trust in relationships. In summary, the ethical course of action would involve a careful consideration of the potential harm, the duty to protect others, the importance of confidentiality, and the possibility of finding a solution that minimises harm while respecting the friend’s trust. | When faced with a situation where a friend confides in you about a serious issue but requests secrecy, yet disclosure could potentially prevent harm to others, you are confronted with a complex ethical dilemma. Here are steps to consider in determining the ethical course of action: 1. **Assess the Severity of Harm**: Evaluate the potential harm that could result from keeping the information secret versus the harm that could come from breaking confidentiality. If the secret involves imminent danger to someone’s life, health, or safety, this weighs heavily in favour of disclosure. 2. **Consider Legal Obligations**: In some cases, the law may require you to report certain information, such as threats of violence or abuse. It’s important to be aware of these legal obligations, as they might dictate your course of action. 3. **Ethical Principles**: Reflect on ethical principles such as beneficence (doing good), nonmaleficence (avoiding harm), autonomy (respecting an individual’s freedom of choice), and justice (fairness in treatment). Balancing these principles can help guide your decision. 4. **Confidentiality vs. Duty to Warn**: Consider the ethical duty to maintain your friend’s confidentiality against the duty to warn others who might be at risk. This is particularly relevant in professions bound by confidentiality (e.g., healthcare, counselling) but can apply in personal situations as well. |
| Emotional Intelligence: Interpret the emotions conveyed in the sentence: ‘Seeing the empty chair at the dinner table, I felt a profound sense of loss and nostalgia.’ | The sentence conveys feelings of sadness, emptiness, and longing. The speaker is experiencing a deep sense of loss, possibly due to the absence of a loved one who used to sit at the dinner table. This absence also triggers feelings of nostalgia, indicating that the speaker misses the past times spent with the person who is no longer present. | The emotions conveyed in the sentence are sadness and longing. The empty chair at the dinner table likely symbolises the absence of someone significant, perhaps due to death, departure, or estrangement. This absence triggers a deep feeling of loss, as well as nostalgia, which is a sentimental yearning for the past when the person was still present. These emotions reflect a mix of grief and the fond remembrance of past experiences shared with the missing individual. |
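Both models report x = 2 and y = 1 for the linear system in the table. As a quick independent check, the sketch below solves the same system with Cramer’s rule, a different technique from the elimination and substitution methods the models used, and confirms the result against both equations. The helper name `solve_2x2` is hypothetical.

```python
from fractions import Fraction


def solve_2x2(a1, b1, c1, a2, b2, c2):
    """Solve a1*x + b1*y = c1 and a2*x + b2*y = c2 using Cramer's rule."""
    det = a1 * b2 - a2 * b1
    if det == 0:
        raise ValueError("The system has no unique solution.")
    # Exact rational arithmetic avoids floating-point rounding.
    x = Fraction(c1 * b2 - c2 * b1, det)
    y = Fraction(a1 * c2 - a2 * c1, det)
    return x, y


# 3x + 4y = 10 and 2x - y = 3, as posed in the comparison table.
x, y = solve_2x2(3, 4, 10, 2, -1, 3)
print(f"x = {x}, y = {y}")

# Substitute back into both original equations.
print(3 * x + 4 * y == 10, 2 * x - y == 3)
```

Checks like this are a cheap way to score mathematical answers automatically when comparing models on a larger set of prompts.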

Conclusion: The Promise of Small Language Models

Small language models (SLMs) such as Phi-3 mini offer a compelling blend of efficiency and performance. Despite their reduced size, these models demonstrate impressive capabilities in areas such as reasoning, comprehension, and context understanding. In the comparison above, the two models’ responses differ in length and style but reach the same conclusions on all five prompts, which suggests that small language models hold significant promise for a wide range of applications.

Their efficiency makes them accessible to a broader audience, requiring fewer computational resources while keeping output quality competitive on focused tasks. This democratisation of AI technology allows more individuals and organisations to harness the power of language models without the need for extensive infrastructure. Furthermore, the versatility of these models enables them to be used effectively in numerous practical scenarios, from assisting in daily tasks to more complex problem-solving activities.

In conclusion, small language models are not just scaled-down versions of their larger counterparts; they represent a strategic approach to delivering powerful AI capabilities in a more resource-efficient and accessible manner. As the field of AI continues to evolve, the promise of small language models becomes increasingly evident, paving the way for innovative applications and broader adoption.